What Intelligence Tests Miss

Keith Stanovich

Other important qualities needed besides IQ:

* tendency to collect information before making up your mind

* tendency to seek different points of view before coming to a conclusion

* think extensively about a problem before responding

* calibrate the degree of strength of one's opinions to the degree of evidence available

* to think about future consequences before taking action

* explicitly weigh pluses and minuses

* to seek nuance and avoid absolutism


Karl Popper's distinction between clocks and clouds. Clocks are neat, orderly systems that can be taken apart and studied and measured to see how they fit together and work. Clouds are irregular and idiosyncratic. They are hard to study because they change from second to second. One of the temptations of research is to try to treat everything as a clock which can be evaluated with regular measurements. So IQ is stable and easy to measure. But mental character is cloud-like. Mental character is built up the way moral character is - through being trialled.

Raw intelligence (IQ) is useful in solving well-defined problems. Mental character helps you figure out what kind of problem you face and what sort of rules you should apply to solve it. Coming up with the rules and honestly evaluating your performance afterward are mental activities barely correlated with IQ.

(Scientific American)

In Brief

Who are You Calling 'Smart'?

Traditional IQ tests miss some of the most important aspects of real-world decision making. It is possible to test high in IQ yet to suffer from the logical-thought defect known as dysrationalia.

One cause of dysrationalia is that people tend to be cognitive misers, meaning that they take the easy way out when trying to solve problems, often leading to solutions that are wrong.

Another cause of dysrationalia is the mindware gap, which occurs when people lack the specific knowledge, rules and strategies needed to think rationally.

Tests do exist that can measure dysrationalia, and they should be given more often to pick up the deficiencies that IQ tests miss.

No doubt you know several folks with perfectly respectable IQs who repeatedly make poor decisions. The behavior of such people tells us that we are missing something important by treating intelligence as if it encompassed all cognitive abilities. I coined the term 'dysrationalia' (analogous to 'dyslexia'), meaning the inability to think and behave rationally despite having adequate intelligence, to draw attention to a large domain of cognitive life that intelligence tests fail to assess. Although most people recognize that IQ tests do not measure every important mental faculty, we behave as if they do. We have an implicit assumption that intelligence and rationality go together—or else why would we be so surprised when smart people do foolish things?

It is useful to get a handle on dysrationalia and its causes because we are beset by problems that require increasingly more accurate, rational responses. In the 21st century, shallow processing can lead physicians to choose less effective medical treatments, can cause people to fail to adequately assess risks in their environment, can lead to the misuse of information in legal proceedings, and can make parents resist vaccinating their children. Millions of dollars are spent on unneeded projects by government and private industry when decision makers are dysrationalic, billions are wasted on quack remedies, unnecessary surgery is performed and costly financial misjudgments are made.

IQ tests do not measure dysrationalia. But as I show in my 2010 book, What Intelligence Tests Miss: The Psychology of Rational Thought, there are ways to measure dysrationalia and ways to correct it. Decades of research in cognitive psychology have suggested two causes of dysrationalia. One is a processing problem, the other a content problem. Much is known about both of them.

The Case of the Cognitive Miser

The processing problem comes about because we tend to be cognitive misers. When approaching a problem, we can choose from any of several cognitive mechanisms. Some mechanisms have great computational power, letting us solve many problems with great accuracy, but they are slow, require much concentration and can interfere with other cognitive tasks. Others are comparatively low in computational power, but they are fast, require little concentration and do not interfere with other ongoing cognition. Humans are cognitive misers because our basic tendency is to default to the processing mechanisms that require less computational effort, even when they are less accurate.

Are you a cognitive miser? Consider the following problem, taken from the work of Hector Levesque, a computer scientist at the University of Toronto. Try to answer it yourself before reading the solution:

1. Jack is looking at Anne, but Anne is looking at George. Jack is married, but George is not. Is a married person looking at an unmarried person?

A) Yes

B) No

C) Cannot be determined

More than 80 percent of people choose C. But the correct answer is A. Here is how to think it through logically: Anne is the only person whose marital status is unknown. You need to consider both possibilities, either married or unmarried, to determine whether you have enough information to draw a conclusion. If Anne is married, the answer is A: she would be the married person who is looking at an unmarried person (George). If Anne is not married, the answer is still A: in this case, Jack is the married person, and he is looking at Anne, the unmarried person. This thought process is called fully disjunctive reasoning - reasoning that considers all possibilities. The fact that the problem does not reveal whether Anne is or is not married suggests to people that they do not have enough information, and they make the easiest inference (C) without thinking through all the possibilities.
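The case analysis above can be sketched in a few lines of Python. This is only an illustration of fully disjunctive reasoning; the names `LOOKS_AT`, `KNOWN_STATUS`, `married_looks_at_unmarried` and `answer_by_cases` are made up for this sketch and do not come from Levesque's work.

```python
import itertools

# Who is looking at whom, and each person's marital status
# (True = married, False = unmarried, None = not stated in the puzzle).
LOOKS_AT = [("Jack", "Anne"), ("Anne", "George")]
KNOWN_STATUS = {"Jack": True, "Anne": None, "George": False}


def married_looks_at_unmarried(status):
    """True if at least one married person is looking at an unmarried person."""
    return any(status[a] and not status[b] for a, b in LOOKS_AT)


def answer_by_cases():
    """Try every possible value for each unknown status and compare the outcomes."""
    unknown = [name for name, s in KNOWN_STATUS.items() if s is None]
    outcomes = set()
    for values in itertools.product([True, False], repeat=len(unknown)):
        status = dict(KNOWN_STATUS)
        status.update(zip(unknown, values))
        outcomes.add(married_looks_at_unmarried(status))
    if outcomes == {True}:
        return "A) Yes"
    if outcomes == {False}:
        return "B) No"
    return "C) Cannot be determined"


print(answer_by_cases())  # A) Yes
```

The answer is "cannot be determined" only if the different cases disagree. Here both cases for Anne give the same result, so the unknown does not matter.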

Most people can carry out fully disjunctive reasoning when they are explicitly told that it is necessary (as when there is no option like 'cannot be determined' available). But most do not automatically do so, and the tendency to do so is only weakly correlated with intelligence.

Here is another test of cognitive miserliness, as described by Nobel Prize-winning psychologist Daniel Kahneman and his colleague Shane Frederick:

2. A bat and a ball cost $1.10 in total. The bat costs $1 more than the ball. How much does the ball cost?

Many people give the first response that comes to mind - 10 cents. But if they thought a little harder, they would realize that this cannot be right: the bat would then have to cost $1.10, for a total of $1.20. IQ is no guarantee against this error. Kahneman and Frederick found that large numbers of highly select university students at the Massachusetts Institute of Technology, Princeton and Harvard were cognitive misers, just like the rest of us, when given this and similar problems.
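The check that the intuitive answer skips can be written out as a short sketch. Prices are kept in whole cents to avoid rounding issues; the function names are invented for this illustration.

```python
TOTAL_CENTS = 110       # bat + ball = $1.10
DIFFERENCE_CENTS = 100  # bat costs $1.00 more than the ball


def ball_price_cents(total, difference):
    """Solve ball + (ball + difference) = total for the ball's price.

    Assumes total - difference is an even number of cents.
    """
    return (total - difference) // 2


def satisfies_both_conditions(ball, total, difference):
    """Check a candidate ball price against both statements in the problem."""
    bat = ball + difference
    return ball + bat == total


ball = ball_price_cents(TOTAL_CENTS, DIFFERENCE_CENTS)
print(ball)                                                          # 5
print(satisfies_both_conditions(ball, TOTAL_CENTS, DIFFERENCE_CENTS))  # True
print(satisfies_both_conditions(10, TOTAL_CENTS, DIFFERENCE_CENTS))    # False
```

The ball costs 5 cents and the bat $1.05. The intuitive 10 cents fails the check because the total would come to $1.20.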

Another characteristic of cognitive misers is the 'myside' bias - the tendency to reason from an egocentric perspective. In a 2008 study my colleague Richard West of James Madison University and I presented a group of subjects with the following thought problem:

3. Imagine that the U.S. Department of Transportation has found that a particular German car is eight times more likely than a typical family car to kill occupants of another car in a crash. The federal government is considering restricting sale and use of this German car. Please answer the following two questions: Do you think sales of the German car should be banned in the U.S.? Do you think the German car should be banned from being driven on American streets?

Then we presented a different group of subjects with the thought problem stated a different way - more in line with the true data from the Department of Transportation at the time, which had found an increased risk of fatalities not in a German car but in an American one:

Imagine that the Department of Transportation has found that the Ford Explorer is eight times more likely than a typical family car to kill occupants of another car in a crash. The German government is considering restricting sale or use of the Ford Explorer. Please answer the following two questions: Do you think sales of the Ford Explorer should be banned in Germany? Do you think the Ford Explorer should be banned from being driven on German streets?

Among the American subjects we tested, we found considerable support for banning the car when it was a German car being banned for American use: 78.4 percent thought car sales should be banned, and 73.7 percent thought the car should be kept off the streets. But for the subjects for whom the question was stated as whether an American car should be banned in Germany, there was a statistically significant difference: only 51.4 percent thought car sales should be banned, and just 39.2 percent thought the car should be kept off German streets, even though the car in question was presented as having exactly the same poor safety record.

This study illustrates our tendency to evaluate a situation from our own perspective. We weigh evidence and make moral judgments with a myside bias that often leads to dysrationalia that is independent of measured intelligence. The same is true for other tendencies of the cognitive miser that have been much studied, such as attribute substitution and conjunction errors; they are at best only slightly related to intelligence and are poorly captured by conventional intelligence tests.

The Mindware Gap

The second source of dysrationalia is a content problem. We need to acquire specific knowledge to think and act rationally. Harvard cognitive scientist David Perkins coined the term 'mindware' to refer to the rules, data, procedures, strategies and other cognitive tools (knowledge of probability, logic and scientific inference) that must be retrieved from memory to think rationally. The absence of this knowledge creates a mindware gap - again, something that is not tested on typical intelligence tests.

One aspect of mindware is probabilistic thinking, which can be measured. Try to answer the following problem before you read on:

4. Imagine that XYZ viral syndrome is a serious condition that affects one person in 1,000. Imagine also that the test to diagnose the disease always indicates correctly that a person who has the XYZ virus actually has it. Finally, suppose that this test occasionally misidentifies a healthy individual as having XYZ. The test has a false-positive rate of 5 percent, meaning that the test wrongly indicates that the XYZ virus is present in 5 percent of the cases where the person does not have the virus.

Next we choose a person at random and administer the test, and the person tests positive for XYZ syndrome. Assuming we know nothing else about that individual's medical history, what is the probability (expressed as a percentage ranging from zero to 100) that the individual really has XYZ?

The most common answer is 95 percent. But that is wrong. People tend to ignore the first part of the setup, which states that only one person in 1,000 will actually have XYZ syndrome. If the other 999 (who do not have the disease) are tested, the 5 percent false-positive rate means that approximately 50 of them (0.05 times 999) will be told they have XYZ. Thus, for every 51 patients who test positive for XYZ, only one will actually have it. Because of the relatively low base rate of the disease and the relatively high false-positive rate, most people who test positive for XYZ syndrome will not have it. The answer to the question, then, is that the probability a person who tests positive for XYZ syndrome actually has it is one in 51, or approximately 2 percent.
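The same calculation can be expressed with Bayes' rule. The sketch below uses only the numbers given in the problem (base rate of 1 in 1,000, a test that never misses a true case, and a 5 percent false-positive rate); the function and parameter names are chosen for this illustration.

```python
def probability_sick_given_positive(base_rate, sensitivity, false_positive_rate):
    """Bayes' rule: P(sick | positive test)."""
    true_positives = base_rate * sensitivity
    false_positives = (1 - base_rate) * false_positive_rate
    return true_positives / (true_positives + false_positives)


p = probability_sick_given_positive(
    base_rate=1 / 1000,        # one person in 1,000 has XYZ
    sensitivity=1.0,           # the test always detects a real case
    false_positive_rate=0.05,  # 5 percent of healthy people test positive
)
print(f"{p:.1%}")  # 2.0%
```

Without rounding, 0.05 times 999 is 49.95 expected false positives, so the exact ratio is 1 in 50.95. The article's rounded figure of 1 in 51 gives the same answer of about 2 percent.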

A second aspect of mindware, the ability to think scientifically, is also missing from standard IQ tests, but it, too, can be readily measured:

5. An experiment is conducted to test the efficacy of a new medical treatment. Picture a 2 x 2 matrix that summarizes the results as follows:

| | Improvement | No Improvement |
|---|---|---|
| Treatment Given | 200 | 75 |
| No Treatment Given | 50 | 15 |

As you can see, 200 patients were given the experimental treatment and improved; 75 were given the treatment and did not improve; 50 were not given the treatment and improved; and 15 were not given the treatment and did not improve. Before reading ahead, answer this question with a yes or no: Was the treatment effective?

Most people will say yes. They focus on the large number of patients (200) in whom treatment led to improvement and on the fact that of those who received treatment, more patients improved (200) than failed to improve (75). Because the probability of improvement (200 out of 275 treated, or 200/275 = 0.727) seems high, people tend to believe the treatment works. But this reflects an error in scientific thinking: an inability to consider the control group, something that (disturbingly) even physicians are often guilty of. In the control group, improvement occurred even when the treatment was not given. The probability of improvement with no treatment (50 out of 65 not treated, or 50/65 = 0.769) is even higher than the probability of improvement with treatment, meaning that the treatment being tested can be judged to be completely ineffective.
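The comparison with the control group takes only a few lines to compute. The following is a minimal sketch using the four counts from the matrix; `improvement_rate` is a name made up for this example, and the code compares raw proportions only, without any test of statistical significance.

```python
def improvement_rate(improved, not_improved):
    """Proportion of a group that improved."""
    return improved / (improved + not_improved)


treated = improvement_rate(improved=200, not_improved=75)
control = improvement_rate(improved=50, not_improved=15)

print(f"treated: {treated:.3f}")  # treated: 0.727
print(f"control: {control:.3f}")  # control: 0.769
print("treatment group did better:", treated > control)  # treatment group did better: False
```

Looking only at the first row (200 versus 75) is what makes the treatment seem effective. The second row is what shows that patients improved at least as often without it.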

Another mindware problem relates to hypothesis testing. This, too, is rarely tested on IQ tests, even though it can be reliably measured, as Peter C. Wason of University College London showed. Try to solve the following puzzle, called the four-card selection task, before reading ahead:

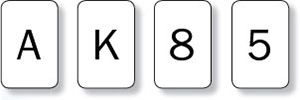

6. As seen in the diagram, four cards are sitting on a table. Each card has a letter on one side and a number on the other. Two cards are letter-side up, and two of the cards are number-side up. The rule to be tested is this: for these four cards, if a card has a vowel on its letter side, it has an even number on its number side. Your task is to decide which card or cards must be turned over to find out whether the rule is true or false. Indicate which cards must be turned over.

Most people get the answer wrong, and it has been devilishly hard to figure out why. About half of them say you should pick A and 8: a vowel to see if there is an even number on its reverse side and an even number to see if there is a vowel on its reverse. Another 20 percent choose to turn over the A card only, and another 20 percent turn over other incorrect combinations. That means that 90 percent of people get it wrong.

Let's see where people tend to run into trouble. They are okay with the letter cards: most people correctly choose A. The difficulty is in the number cards: most people mistakenly choose 8. Why is it wrong to choose 8? Read the rule again: it says that a vowel must have an even number on the back, but it says nothing about whether an even number must have a vowel on the back or what kind of number a consonant must have. (It is because the rule says nothing about consonants, by the way, that there is no need to see what is on the back of the K.) So finding a consonant on the back of the 8 would say nothing about whether the rule is true or false. In contrast, the 5 card, which most people do not choose, is essential. The 5 card might have a vowel on the back. And if it does, the rule would be shown to be false because that would mean that not all vowels have even numbers on the back. In short, to show that the rule is not false, the 5 card must be turned over.

When asked to prove something true or false, people tend to focus on confirming the rule rather than falsifying it. This is why they turn over the 8 card, to confirm the rule by observing a vowel on the other side, and the A card, to find the confirming even number. But if they thought scientifically, they would look for a way to falsify the rule - a thought pattern that would immediately suggest the relevance of the 5 card (which might contain a disconfirming vowel on the back). Seeking falsifying evidence is a crucial component of scientific thinking. But for most people, this bit of mindware must be taught until it becomes second nature.
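The falsification strategy can be made mechanical: a card needs to be turned over only if something that could be on its hidden side would break the rule. The sketch below assumes the four visible faces are K, A, 8 and 5, as in the discussion above, and that hidden numbers are single digits; the function names are invented for this illustration.

```python
import string

VOWELS = set("AEIOU")


def violates_rule(letter, number):
    """The rule 'vowel implies even number' is broken only by a vowel paired with an odd number."""
    return letter in VOWELS and number % 2 != 0


def must_turn_over(visible_face):
    """A card is worth turning only if some possible hidden face would falsify the rule."""
    if visible_face.isalpha():
        # Hidden side is a number (assumed here to be a single digit).
        return any(violates_rule(visible_face, n) for n in range(10))
    # Hidden side is a letter.
    return any(
        violates_rule(letter, int(visible_face))
        for letter in string.ascii_uppercase
    )


cards = ["K", "A", "8", "5"]
print([card for card in cards if must_turn_over(card)])  # ['A', '5']
```

The 8 card drops out because nothing on its back could contradict the rule, which is the point most people miss.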

Dysrationalia and Intelligence

The modern period of intelligence research was inaugurated by Charles Spearman in a famous paper published in 1904 in the American Journal of Psychology. Spearman found that performance on one cognitive task tends to correlate with performance on other cognitive tasks. He termed this correlation the positive manifold, the belief that all cognitive skills will show substantial correlations with one another. This belief has dominated the field ever since.

Yet as research in my lab and elsewhere has shown, rational thinking can be surprisingly dissociated from intelligence. Individuals with high IQs are no less likely to be cognitive misers than those with lower IQs. In a Levesque problem, for instance (the 'Jack is looking at Anne, who is looking at George' problem discussed earlier), high IQ is no guarantee against the tendency to take the easy way out. No matter what their IQ, most people need to be told that fully disjunctive reasoning will be necessary to solve the puzzle, or else they won't bother to use it. Maggie Toplak of York University in Toronto, West and I have shown that high-IQ people are only slightly more likely to spontaneously adopt disjunctive reasoning in situations that do not explicitly demand it.

For the second source of dysrationalia, mindware deficits, we would expect to see some correlation with intelligence because gaps in mindware often arise from lack of education, and education tends to be reflected in IQ scores. But the knowledge and thinking styles relevant to dysrationalia are often not picked up until rather late in life. It is quite possible for intelligent people to go through school and never be taught probabilistic thinking, scientific reasoning, and other strategies measured by the XYZ virus puzzle and the four-card selection task described earlier.

When rational thinking is correlated with intelligence, the correlation is usually quite modest. Avoidance of cognitive miserliness has a correlation with IQ in the range of 0.20 to 0.30 (on the scale of correlation coefficients that runs from 0 to 1.0). Sufficient mindware has a similar modest correlation, in the range of 0.25 to 0.35. These correlations allow for substantial discrepancies between intelligence and rationality. Intelligence is thus no inoculation against any of the sources of dysrationalia I have discussed.

Cutting Intelligence Down to Size

The idea that IQ tests do not measure all the key human faculties is not new; critics of intelligence tests have been making that point for years. Robert J. Sternberg of Cornell University and Howard Gardner of Harvard talk about practical intelligence, creative intelligence, interpersonal intelligence, bodily-kinesthetic intelligence, and the like. Yet appending the word 'intelligence' to all these other mental, physical and social entities promotes the very assumption the critics want to attack. If you inflate the concept of intelligence, you will inflate its close associates as well. And after 100 years of testing, it is a simple historical fact that the closest associate of the term 'intelligence' is 'the IQ test part of intelligence.' This is why my strategy for cutting intelligence down to size is different from that of most other IQ-test critics. We are missing something by treating intelligence as if it encompassed all cognitive abilities.

My goal in proposing the term 'dysrationalia' is to separate intelligence from rationality, a trait that IQ tests do not measure. The concept of dysrationalia, and the empirical evidence indicating that the condition is not rare, should help create a conceptual space in which we value abilities at least as important as those currently measured on IQ tests - abilities to form rational beliefs and to take rational action.
